ROLE

Founding Product Designer

TIMELINE

TEAM

2 Designers

4 Engineers

1 Product Manager

TOOLS

Figma

v0

TL;DR

AgentLab is an AI-powered platform for scientists to build and deploy AI agents that automate research workflows. As the founding product designer at MorphMind, I developed a Layered Interaction Model that transforms "black-box" AI into a traceable, hierarchical node map, repositioning AI agents as verifiable lab assets. Beyond product design, I designed the company website and led our inaugural launch workshop with 30+ researchers and industry professionals.

The product launched in December 2025. Try it here: https://agentlab.morphmind.ai

PROBLEM

The "technical wall" in adopting AI in life sciences

In the world of life sciences research, scientists are currently forced into a trade-off between accessibility and accuracy:

Generic AI

Tools like ChatGPT or Claude are easy to use but lack domain expertise and "hallucinate" scientific facts.

Coding Bottlenecks

Platforms like n8n or LangChain are powerful but assume engineering expertise, creating a steep adoption barrier for scientists.

The deeper gap: trust

Through interviews with researchers at Harvard University, I realized that the primary barrier to AI adoption wasn't just a lack of features, but a lack of trust in high-stakes scientific environments.

Scientific Traceability

In most AI tools, users ask a question and receive an answer, but the “how” is buried in generated text. Scientists need an audit trail and the ability to point to exact methods, parameters, and data sources for peer review and compliance.

Design Insight

The interface can't just tell users what the AI does. It has to show the architecture of the logic itself.

Reproducibility

Generic AI is probabilistic and siloed. Workflows die in private chat threads. Scientists need a way to "lock" logic into stable, reusable Standard Operating Procedures (SOPs).

Design Insight

AI agents have to be treated as shareable lab assets, not one-time personal assistants.

Expert-in-the-Loop

Scientists don't want the AI to replace them; they want it to augment their expertise. They need a way to review the AI's "thinking" at a high level while retaining the ability to verify and correct the "doing".

Design Insight

The system needs to expose the AI’s reasoning while preserving human control over critical steps.

SOLUTION

AgentLab: Build, run, and share AI-powered research workflows without coding

Transform user intent into a validated research plan

I designed a conversational UX flow that lets users describe their research workflow in natural language. Rather than executing immediately, the AI proposes a plan for review, so users remain the primary decision-makers and can catch hallucinated or incorrect steps before anything runs.

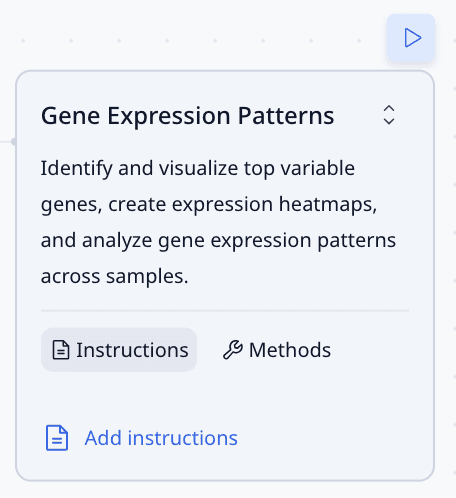

Make AI reasoning visible and traceable

As the agent runs, its workflow materializes in real time as a hierarchical node map. Each node represents a discrete scientific step and includes the instructions and methods, providing users with a traceable audit trail.

Preserve human authority over critical decisions

Because visibility alone isn’t enough in high-stakes research, I made sure users can actively intervene. They can inspect generated code, request explanations, or modify individual steps using natural language, all without writing code or restarting the workflow.

Audit results across the entire pipeline

Scientific integrity requires more than just a final answer. The platform captures and surfaces data at every step, allowing users to review the final result or drill into step-level outputs to validate findings, support peer review, and extract supplemental data.

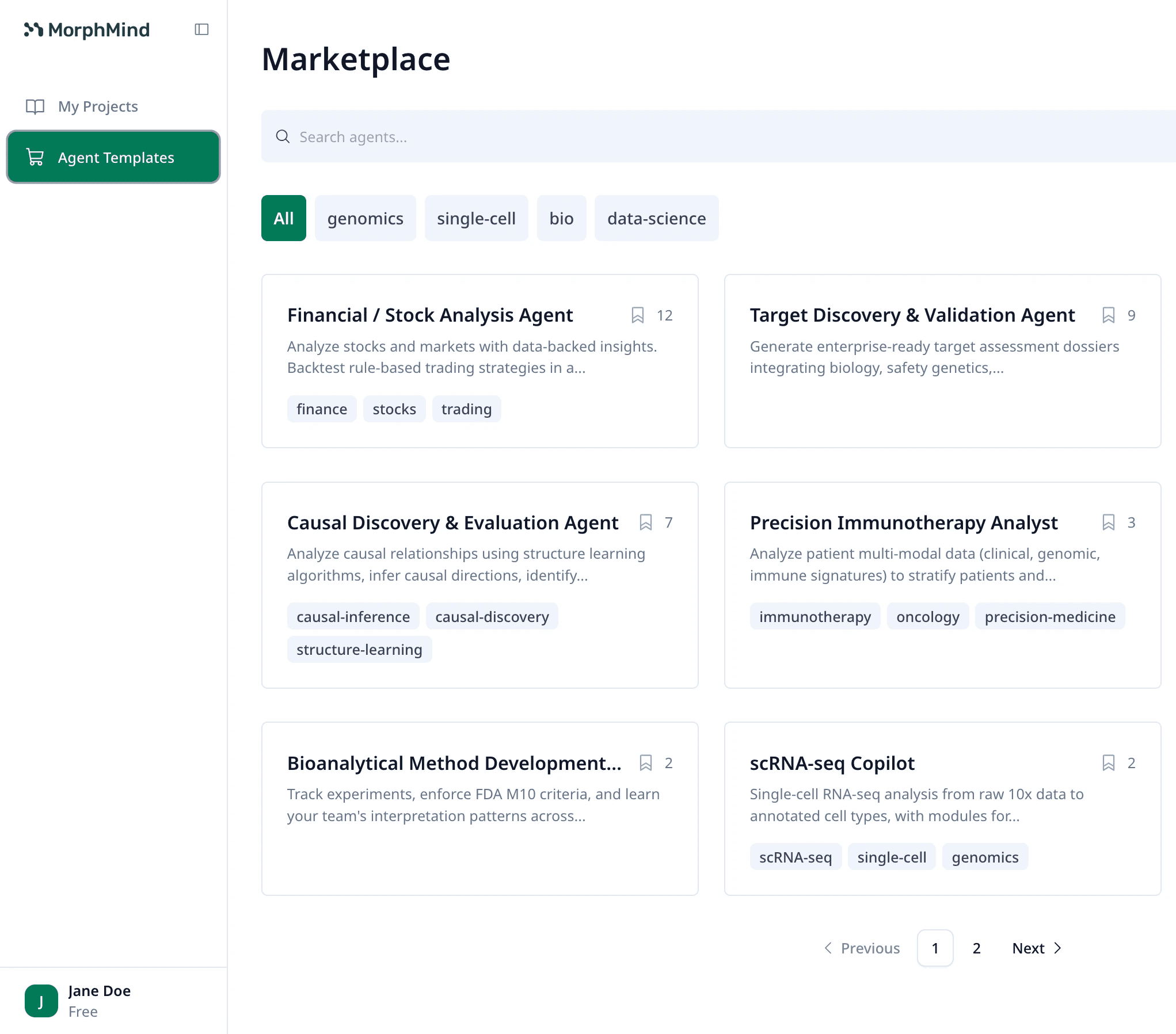

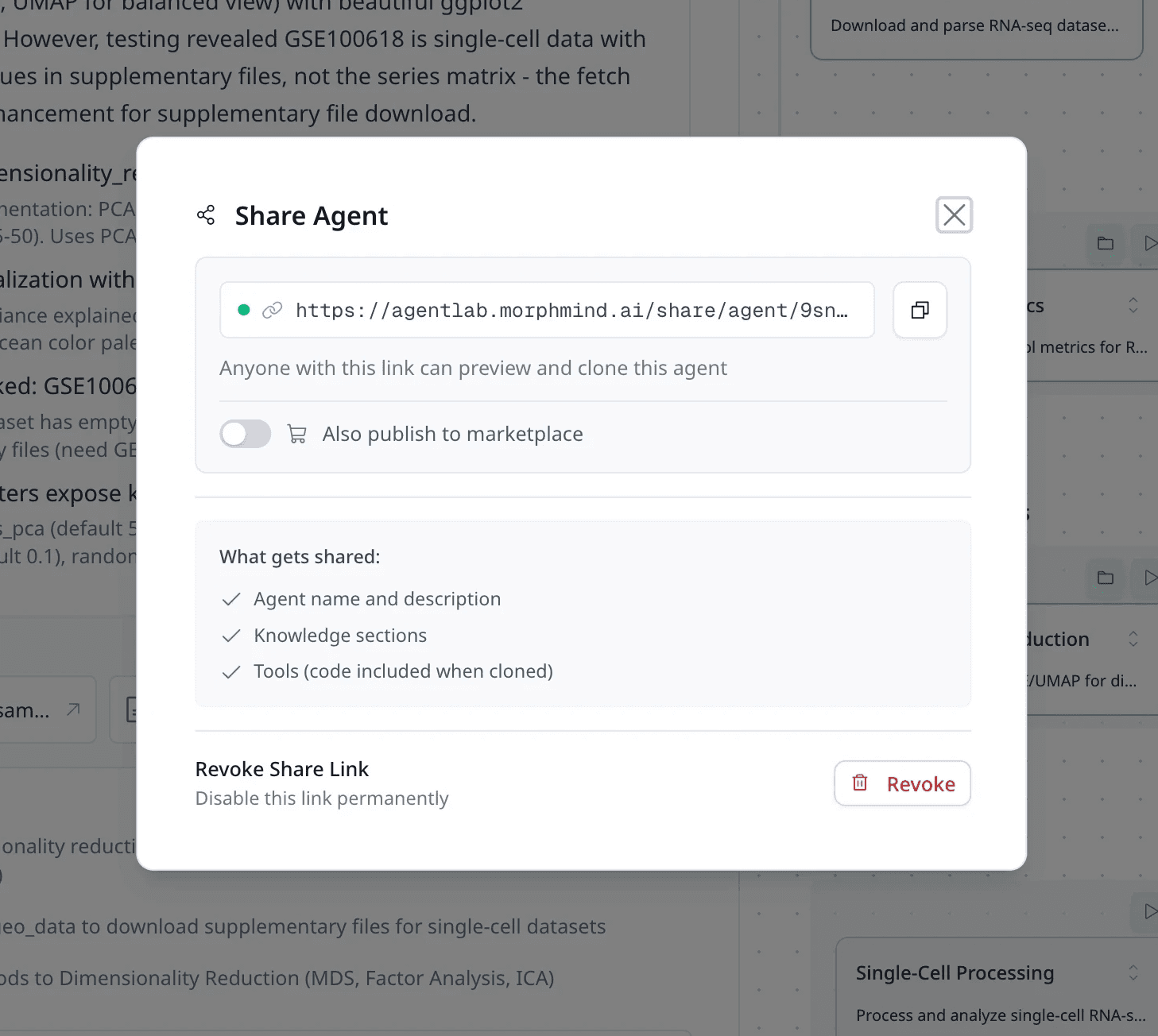

Turn agents into reusable, scalable lab assets

Validated agents can be shared across teams and reused as standardized workflows. This replaces one-off chat interactions with durable, reproducible SOPs, allowing labs to scale proven methodologies without rebuilding logic from scratch.

Trust has to be earned progressively, across the entire user journey.

DESIGN DECISIONS & ITERATIONS

Layer I. Collaborative Agent Creation

How might we help scientists create an AI agent that they can trust?

Iteration I: Manual Setup

Initially, users had to manually enter domain knowledge and describe the workflow in detail, as I assumed that more input would lead to higher accuracy.

Too time-consuming

Users felt pressured to provide “perfect” inputs

Iteration II: AI Proposes, Humans Review

To balance AI convenience with human judgment, I redesigned agent creation as a collaborative process. Users first provide a high-level prompt, then the AI asks clarifying questions and proposes a plan for users to review.

Allows users to remain decision makers while the AI handles the technical orchestration

Establishes trust before the agent runs

AI asks for user input where necessary

Users review the plan before it's executed
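The "AI proposes, humans review" flow can be read as a small approval gate. The sketch below is illustrative only and is not AgentLab's implementation; `ResearchPlan`, `PlanStatus`, and `execute` are hypothetical names chosen for this example. It shows one way to ensure a plan cannot run until clarifying questions are answered and the user has approved it.

```python
from dataclasses import dataclass, field
from enum import Enum


class PlanStatus(Enum):
    DRAFT = "draft"        # the AI may still have clarifying questions
    PROPOSED = "proposed"  # the plan is ready for the user to review
    APPROVED = "approved"  # the user has signed off; execution is allowed


@dataclass
class ResearchPlan:
    """Hypothetical plan object; all field names are assumptions."""
    prompt: str
    open_questions: list[str] = field(default_factory=list)
    answers: dict[str, str] = field(default_factory=dict)
    steps: list[str] = field(default_factory=list)
    status: PlanStatus = PlanStatus.DRAFT

    def answer(self, question: str, reply: str) -> None:
        # Remove first so an unknown question raises before anything is stored.
        self.open_questions.remove(question)
        self.answers[question] = reply

    def propose(self, steps: list[str]) -> None:
        # The AI only proposes a plan once the user has answered its questions.
        if self.open_questions:
            raise ValueError("clarifying questions are still unanswered")
        self.steps = list(steps)
        self.status = PlanStatus.PROPOSED

    def approve(self) -> None:
        # Approval is an explicit user action on a proposed plan.
        if self.status is not PlanStatus.PROPOSED:
            raise ValueError("only a proposed plan can be approved")
        self.status = PlanStatus.APPROVED


def execute(plan: ResearchPlan) -> list[str]:
    """Run the plan's steps, refusing anything the user has not approved."""
    if plan.status is not PlanStatus.APPROVED:
        raise PermissionError("plan must be reviewed and approved before it runs")
    return [f"ran: {step}" for step in plan.steps]
```

In this sketch the review step is enforced by the data model rather than by the interface alone, which is one way to make the plan review a hard checkpoint instead of an optional screen.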

Layer II. Visualized Agent Workflows

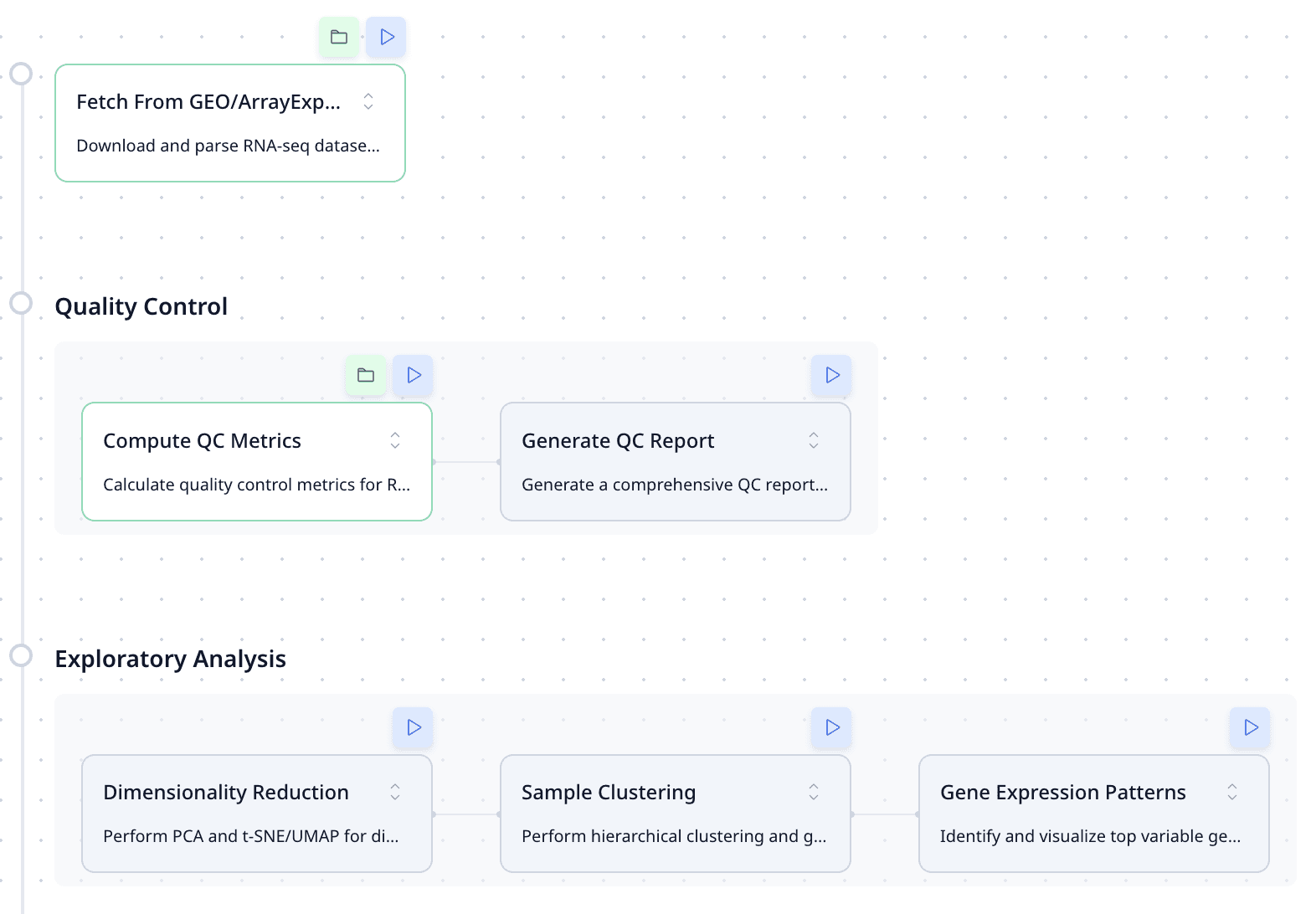

How might we make the AI’s reasoning traceable?

Iteration I: Linear Node Map

Early designs showed each step as a node in a flat, linear flow.

No hierarchy or grouping, making it difficult for users to understand at a glance

Poor scalability for long workflows

Iteration II: Hierarchical Workflows with Progressive Disclosure

I redesigned the workflow view to reflect how scientists reason about experiments: steps are grouped by stages, with high-level flow visible at a glance. Users can click into nodes for detailed methods, parameters, and code.

Matches scientists’ mental models

Keeps humans in the loop without overwhelming them

Steps grouped based on stages

Users can click on a node to see the step details
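The hierarchical view with progressive disclosure can be pictured as a two-level data structure: stages that contain steps, with a collapsed overview and a per-node detail lookup. The sketch below is a minimal illustration under that assumption, not the product's actual data model; `Stage`, `StepNode`, `overview`, and `detail` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class StepNode:
    """One discrete scientific step; field names are assumptions."""
    title: str
    method: str
    parameters: dict[str, str] = field(default_factory=dict)
    code: str = ""


@dataclass
class Stage:
    """A group of related steps shown together in the high-level flow."""
    name: str
    steps: list[StepNode] = field(default_factory=list)


def overview(stages: list[Stage]) -> list[str]:
    """Collapsed view: one line per stage, listing step titles only."""
    return [
        f"{stage.name}: " + " -> ".join(step.title for step in stage.steps)
        for stage in stages
    ]


def detail(stages: list[Stage], stage_name: str, step_title: str) -> StepNode:
    """Expanded view: return the full node the user clicked into."""
    for stage in stages:
        if stage.name == stage_name:
            for step in stage.steps:
                if step.title == step_title:
                    return step
    raise KeyError(f"no step {step_title!r} in stage {stage_name!r}")
```

Here `overview` corresponds to the at-a-glance flow, while `detail` corresponds to clicking a node to see its method, parameters, and code. Keeping the two views as separate functions over the same structure means the detailed record exists for every step even when the interface shows only the summary.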

Layer III. Human Intervention

How might we ensure experts remain in the loop?

V1.1 Release

In the V1.1 release, I designed a node-level intervention system. Users can view the code used for each step, ask the AI to explain a method, and edit steps using natural language.

Gives users deterministic control over the workflows

Layer IV. Reproducibility

How might we support reusable scientific workflows?

V1.0 Release: Agent Templates

Due to engineering constraints, we decided to include only agent templates in the initial release.

V1.1 Release: Adding the Share Agent Feature

Once the platform stabilized, we introduced agent sharing, allowing users to publish their agents to the marketplace for public use or share them privately with their lab members.

Allows labs to reuse validated workflows

HANDOFF

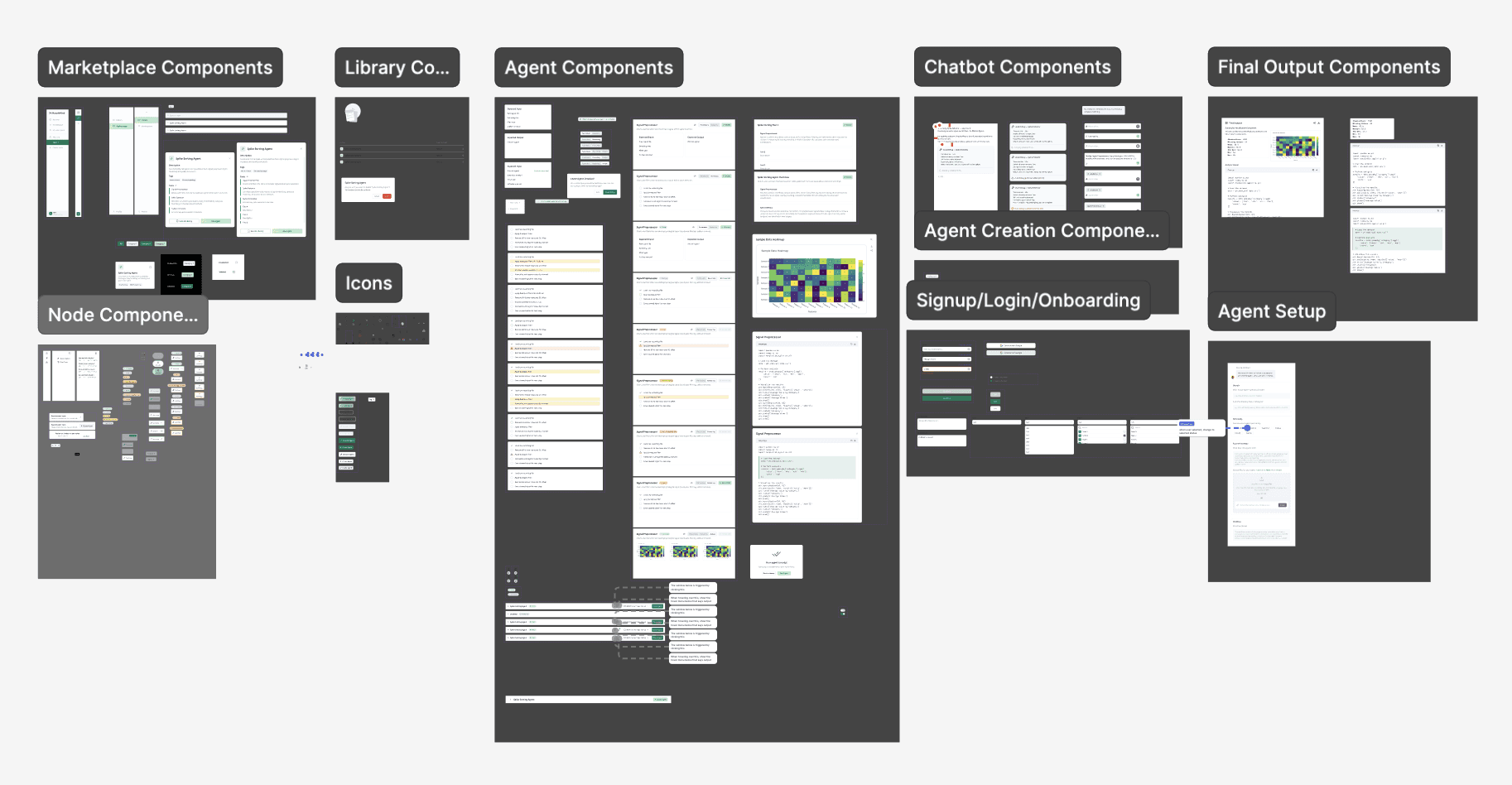

Creating a design system with Shadcn kit as the foundation

I leveraged Shadcn's composable primitives for common UI patterns and designed custom components specific to agent workflows such as node maps, execution states, and intervention controls. This allowed the team to move quickly while maintaining clarity and consistency across a technically dense interface.

Beyond the product: website design

In addition to the core platform, I designed the company's website. I aligned the marketing and product experiences to create a cohesive narrative from first impression to in-product use.

IMPACT

In-person launch workshop with 30+ participants

We led a workshop with 30+ professors, postdoctoral researchers, and students from Harvard and MIT, as well as professionals from pharmaceutical companies. Participants built their own agents live during the session. Feedback was overwhelmingly positive: many described the platform as “game-changing” and said they had “never seen anything like this before.”

TAKEAWAYS

Design for "Productive Friction"

In high-stakes research, speed without certainty is a liability. I learned that adding productive friction (e.g., mandatory plan reviews) is essential because it lets users pause at critical decision points.

Match Users' Mental Models

Exposing more information isn’t enough. I learned to structure AI logic to match how scientists think. By designing for hierarchy and progressive disclosure, I made complex workflows easier for users to understand.

Design AI products as infrastructure

Unlike consumer AI tools, scientific AI isn’t disposable. This encouraged me to rethink AI product design, focusing on creating durable systems that enable reuse, standardization, and long-term trust.
